Introduction

Social interactions are a fundamental aspect of human life involving the reciprocal exchange between two or more socially engaged individuals.1,2 The ability to navigate a wide range of social-interactive contexts efficiently reflects the interplay of several different neurocognitive systems. Interacting individuals may simultaneously engage attentional resources to monitor one another,3 process linguistic information to comprehend speech and form replies,4 and utilize perspective-taking to infer interaction partners’ thoughts and predict their actions.5–7 Despite this complexity, the constraints imposed by modern human neuroimaging modalities have limited much of the research on social interaction to non-interactive, “third-person”, experimental paradigms that involve static social stimuli or the passive observation of social interaction.1,2,8 The focus on non-interactive, “third-person” approaches is problematic because emerging research has demonstrated that active engagement in social interactive behavior is associated with brain activity that is distinct from what is elicited by the passive observation of social interaction.1,2,9–11 Thus, a neuroscientific understanding of human social interaction requires novel neuroimaging approaches that situate participants in real-time interactions with others.

The second-person neuroscience framework2 provides a theoretically motivated starting point for investigating the brain systems underlying human social interaction. According to this framework, social interaction has two core dimensions: social engagement (originally formulated as emotional engagement) and interaction (Figure 1). Social engagement is the subjective, emotional feeling of involvement with and awareness of a social partner (real or perceived), which creates a shared psychological state that enables interaction.12,13 Research has shown that implicit awareness of a real-time social partner, even without physical proximity, impacts a range of cognitive, affective, and behavioral processes.14,15 Interaction refers to the contingent, reciprocal exchanges between individuals, in which the actions of one partner affect the other’s actions.1,16 Interactive neuroimaging studies often assign participants to the role of either initiator or responder to the interactive behavior of a social partner (e.g., sharing an opinion versus answering questions17,18). These roles (i.e., initiator or responder) constitute an orthogonal dimension of interactive behavior that can be investigated with brain imaging.19,20 Importantly, because interaction does not have to be social (e.g., playing chess against a computer) and social engagement can occur without reciprocal interaction (e.g., people-watching at a park), the second-person framework proposes that the neurocognitive processes underlying social interaction differ from those involved in social engagement or interaction (i.e., reciprocity) alone.2 Therefore, we operationalize social interaction neuroimaging studies as those involving both real-time social engagement and reciprocal interaction between social partners, a definition that encompasses a wide range of interactive experimental paradigms (e.g., games, text messages, facial expressions) across various areas of neuroimaging research.1,21

In the decade since the call for a second-person neuroscience,2 considerable progress has been made in functional magnetic resonance imaging (fMRI) research.1 There are numerous methods for investigating social interaction in fMRI environments, including audio-visual set-ups for interactions with out-of-scanner partners,22–24 technological approaches for digitally mediated real-time interactions,25,26 and experimental protocols for deceiving participants into believing that they are engaging with a real-life social partner.1,10,27 This work has shown that minimal social engagement manipulations (i.e., telling participants that they are being observed) can evoke observable changes in physiology, behavior, and brain activity.28,29 Moreover, research has uncovered neural sensitivity to characteristics of the interaction partner that may be important for driving participants’ level of social engagement, such as social closeness (e.g., friend versus stranger30), human-likeness,31 and perceived agency.32–35 Methodological advancements in fMRI data analysis have also provided insights into the brain mechanisms associated with interactive social behavior. For instance, numerous studies have demonstrated that dynamic inter-brain coupling underlies the coordination of reciprocal, interactive behaviors and the achievement of shared understanding between interaction partners across different social contexts (e.g., conversation, joint action36–41). These findings support emerging active inference and predictive processing accounts of human brain function, which propose that social interaction partners mutually implement recursive predictions about each other’s thoughts, feelings, and behaviors, a process made possible by computations occurring across several neurocognitive systems.7,42,43

Extant second-person neuroscience studies suggest that social interaction is associated with brain activity that cuts across several large-scale brain networks – including the default mode network (DMN), reward system, attention and cognitive control networks – that are utilized in a variety of social interactive contexts.44–46 For instance, research has shown that brain regions associated with reasoning about another’s mental state (i.e., the mentalizing network, which overlaps with the DMN) exhibit sensitivity to a perceived social partner regardless of mentalizing task demands,18,23,47 suggesting that people may spontaneously engage mentalizing processes in social contexts. Other research on joint attention, or the shared focus of two or more people on a common object or event, implicates overlapping frontoparietal regions when participants both initiate and respond to eye-gaze-based social exchanges.19,20,48,49 This finding suggests that attentional and cognitive control systems may be important for social interaction. However, it remains unclear whether the findings are specific to the demands of coordinating gaze-based visual attention or if they reflect more general dyadic processes utilized in other social interactive contexts. Furthermore, second-person studies have revealed that interactions with human but not computer partners elicit brain activity in key regions of the reward system,18,50 indicating that motivational processes may distinguish social from non-social interaction. While these findings have expanded our understanding of the neurocognitive processes contributing to social interaction, the diversity of task paradigms and brain areas reported across studies raises questions about the brain systems commonly engaged across diverse social interaction contexts and their relation to core components of social interactive behavior.

Coordinate-based meta-analysis (CBMA) is a data-driven approach to map the spatial convergence of brain activity reported across fMRI and positron emission tomography (PET) studies and thus provides a way to identify regions common to social interaction across a variety of tasks.51 To date, three CBMA studies have examined social interaction.45,52,53 Arioli and Canessa52 investigated brain regions associated with the observation of social interaction and uncovered convergence in the dorsal medial prefrontal cortex (dMPFC), posterior superior temporal sulcus/gyrus (pSTS), and precuneus. Because this study focused exclusively on non-interactive studies, its results do not reveal which brain regions are common to active engagement in social interactive behavior. Conversely, Prince and Brown53 investigated partnered interaction using second-person studies that compared interaction against control conditions without a live interaction partner, and found significant convergence in the right temporoparietal junction (TPJ). However, this study used a limited set of social interaction-related search terms that yielded a small number of studies, excluding large swaths of the neuroimaging literature that use second-person approaches without explicitly being about social interaction per se.

Feng and colleagues45 conducted the most comprehensive social interaction CBMA to date. Focusing on studies that compared social interaction and non-social control conditions, this larger-scale study found significant convergence in the dMPFC extending into the dorsal anterior cingulate cortex (dACC), bilateral anterior insulae (AI), right TPJ, left inferior frontal gyrus (IFG), posterior cingulate cortex (PCC), left inferior parietal lobule (IPL), and precuneus.45 While informative, these findings have some limitations to their interpretability. For instance, the analysis included many studies wherein participants did not interact with a social partner in real time but, rather, responded to decisions made by their partner in the past.54 This may miss brain systems that are important for the reciprocal, real-time exchanges that occur between partners. Moreover, Feng and colleagues’ pooled analysis included data from contrasts that do not necessarily isolate brain activity specific to social interaction (e.g., comparisons of win versus loss trials during gameplay with a social partner).55 Those contrasts distinguished brain activity associated with positive and negative outcomes rather than targeting brain activity underlying social interaction. These limitations do not undermine the importance of their work but highlight the need for a clear operationalization of social interaction neuroimaging studies.

The objectives of the current study were to: 1) elucidate brain regions that are commonly reported across fMRI and PET investigations that utilize social interactive approaches; 2) disentangle common and separable brain regions associated with core components of social interactive behavior (i.e., engagement, interaction, and initiating/responding roles); and 3) explore the brain systems and cognitive functions associated with these regions. To this end, we conducted an exhaustive search for neuroimaging studies that targeted brain activity associated with social interaction by contrasting against a non-socially interactive control condition and used CBMA to spatially map significant convergence across this diverse literature. We used subsets of studies to evaluate whether dissociable brain structures underlie important aspects of social interactive behavior. This included identifying regions that may be more specific to social engagement or interactive behavior and those involved in initiating versus responding behaviors during social interaction. Finally, we utilized meta-analytic connectivity modeling (MACM) and functional decoding analyses to identify brain networks and cognitive functions, respectively, associated with each of the brain regions uncovered in the overarching meta-analysis.

Methods

Inclusion Criteria

Inclusion criteria for studies used in the meta-analytic investigation were: 1) fMRI or PET studies that use experimental paradigms meeting our definition of social interaction – wherein participants engage with one or more social partners in a real-time reciprocal exchange; 2) reported 3D coordinates for brain activity in either Montreal Neurological Institute (MNI) or Talairach space from whole-brain contrasts targeting social interaction by comparison against a control task condition that does not meet the criteria for social interaction; and 3) data collected from a non-clinical, drug-free, and healthy sample of participants.

The first criterion, which applies to the experimental condition of interest, operationalizes social interaction neuroimaging studies as meeting two sub-criteria: Social Engagement and Interaction. Social engagement occurs when a participant is aware of and engaged with a real-life social partner, or at least believes that they are. The minimal requirement of participants’ belief is based on research demonstrating that implicit awareness of even a physically distant real-time social partner impacts a range of neural and cognitive processes.1,14,15 Social engagement can be achieved through audio-visual setups for engaging with out-of-scanner partners,24,28,56 physical proximity to or contact with a partner in the scanning environment,57–59 digital communication platforms (e.g., texting, direct messaging) for engaging with distant partners,17,18 as well as, and often in combination with, experimental protocols designed to deceive participants into believing that they are engaged with a real-life partner.50,60 The Interaction sub-criterion requires that at least one reciprocated, interactive behavior occurs between participant and social partner, such that the action of one interactant (either participant or partner) affects the action of the other.1,13 The reciprocated behavior does not have to be immediate or direct, as long as it is influenced by or contingent on the prior behavior of the other.23,60 The minimal behavioral criterion of action-response contingencies aims to target the brain systems commonly engaged for interpersonal behavior, rather than the intrapersonal processes comprising each individual’s behavior.16,36 Interaction in neuroimaging can thus take many forms, ranging from simple pairs of initiating and responding behaviors19 to complex conversations and gameplay.61,62

The second criterion refers to the control condition used in the study. Specifically, the control should isolate brain activity associated with social interaction by controlling for non-social interaction-related brain activity. For example, contrasts showing greater brain activity (denoted using the greater than symbol, “>”) for playing rock-paper-scissors against human compared to computer opponents control for the neurocognitive processes required by the game (e.g., working memory), while isolating brain activity that is specific to interaction with a person (e.g.,32). Contrasts for isolating brain activity associated with social interaction include but are not limited to: human > computer interaction, social interaction > solo action, and live interaction > non-live observation. Moreover, this criterion requires whole-brain contrasts to ensure unbiased results and excludes studies that only report results from region of interest (ROI) analysis.

Finally, the third criterion is intended to constrain the search to normative brain responses and excludes studies that only report data from non-healthy or clinical samples, between-group contrasts against non-healthy or clinical samples, and drug manipulations.

Search Strategy and Systematic Search

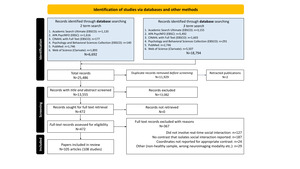

Following best practices, we used an iterative approach to conduct a comprehensive, unbiased search of the neuroimaging literature for studies of social interaction.63 The final search strategies are detailed in Supplemental Materials: Search Strategies. Initially, we screened all citations in relevant review articles,1,45,46 which yielded 27 fMRI papers18,23,26,31,32,47,49,50,57,64–81 that met our inclusion criteria. These articles were used to develop an initial list of keywords used to search the PubMed (National Library of Medicine) database. The search results were checked to ensure that the original 27 articles appeared in the results if they were indexed in the database. If any were missing, the keyword selection and search strategy were refined to improve recall. For original articles that did not come up in the search, additional keywords (e.g., MeSH, subject terms, and modifiers) were identified and incorporated into the search strategy. This process was iteratively repeated until all 27 articles were successfully found. The final search strategy was implemented on November 24, 2021 and involved two sets of search terms implemented in EBSCO databases (APA PsycINFO, Academic Search Ultimate, CINAHL, and Psychology and Behavioral Sciences Collection), PubMed (National Library of Medicine), and Web of Science (Clarivate).

The first set of terms searched for the intersection of primary keywords and modifier terms specifying neuroimaging method (e.g., [“social interaction” OR “group problem solving” OR etc.] AND [“functional magnetic resonance imaging” OR “PET” etc.]). The second set of terms searched for the intersection of primary keywords related to social cognitive processes, qualifier words that specified social interactive context, and modifier terms specifying the neuroimaging methods (e.g., [“mentalizing” OR “theory of mind” etc.] AND [“human partner” OR “social context” etc.] AND [“fMRI” OR “PET” etc.]). Results from the different databases were combined in Zotero, a citation management software, and duplicates were removed, yielding an initial list of 13,555 unique records that were further screened in two phases: 1) title/abstract screening and 2) full-text screening.
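The Boolean structure of the two search sets can be sketched as a small query builder. This is an illustrative sketch only: the term lists below are just the example terms quoted above (the full lists are in Supplemental Materials: Search Strategies), and database-specific syntax such as PubMed field tags, MeSH expansion, or EBSCO truncation operators is deliberately omitted.

```python
def or_block(terms):
    """Quote each term and join the alternatives with OR inside parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(*term_sets):
    """AND together one OR-block per term set (e.g., keywords AND modality)."""
    return " AND ".join(or_block(terms) for terms in term_sets)


# First search set: primary keywords AND neuroimaging-method modifiers.
first_set = build_query(
    ["social interaction", "group problem solving"],
    ["functional magnetic resonance imaging", "PET"],
)
# Second search set: social cognitive keywords AND interactive-context
# qualifiers AND neuroimaging-method modifiers.
second_set = build_query(
    ["mentalizing", "theory of mind"],
    ["human partner", "social context"],
    ["fMRI", "PET"],
)
print(first_set)
print(second_set)
```

Keeping each term set as a separate list makes the iterative refinement described above (adding MeSH or subject terms when a seed article was missed) a matter of appending to one list and rebuilding the query.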

The first phase involved screening the title and abstract and was conducted using Rayyan,82 a web platform for conducting systematic reviews that automatically extracts citation meta-data and facilitates blinded, independent record assessment by research teams. Screening of search results was conducted by a team of six researchers and trained research assistants. Initially, we excluded unqualifying articles that were easy to identify, like behavioral studies, studies that used incompatible human neuroimaging modalities (e.g., EEG, fNIRS, structural MRI, etc.), and studies that did not use second-person neuroscience approaches (e.g., common fMRI paradigms like the stop-signal task). Assessments for eligibility were aided by the built-in Rayyan functions that helped identify animal studies, clinical trials, articles without English translation, and other unqualifying citation types like review articles, book chapters, conference papers, and non-peer-reviewed reports like dissertations or theses. Sets of 100-200 records were randomly assigned to different team members, so that each record was independently labeled by at least two reviewers as either “Exclude” (did not qualify), “Include” (met the inclusion criteria), or “Maybe” (abstract indicated that at least one criterion was met), and conflicts between reviewers’ assessments were resolved via weekly team meetings. Inter-rater reliability was high, with 94% agreement across ratings, and 13,082 records were excluded in the first phase.

The same iterative process was conducted in the second phase of screening. The full text of the remaining 472 records was thoroughly screened to determine whether they met the inclusion criteria (see Supplemental Materials: Article Screening Checklist). Each full-text article was screened by at least two team members and disagreements were resolved through team meetings, but the final determination for inclusion was made by the senior members of the research team (co-authors JSM and ER). Inter-rater reliability was lower during phase two (81% agreement across ratings), and 367 articles were excluded: 127 for not meeting criteria for social interaction; 187 for not including a qualifying contrast; 24 because whole-brain coordinates from qualifying contrasts were not reported in the main text or supplemental materials and could not be obtained through author contact; 14 for using the wrong imaging modality or analytic approach (e.g., structural MRI, resting-state functional connectivity); and 15 for analyses including clinical or non-healthy samples. This process yielded 108 qualifying fMRI and PET studies across 105 articles that were used for CBMA. Most qualifying studies reported results from traditional, mass univariate contrasts, but a few studies used other methodologies to distinguish brain activity associated with social interaction and appropriate control conditions, such as multi-voxel classification using a searchlight approach. See Figure 2 for full Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) details.83

The term “study” refers to results from a single sample of participants, so if an article reported qualifying results from different, independent samples of participants, each set of results was treated as an independent study. For each study, we extracted the reported 3D spatial coordinates of activation foci for every qualifying whole-brain contrast that involved social interaction compared against a control condition that does not meet our criteria for social interaction. Contrasts were annotated with sample size, type of control condition (e.g., non-interactive social engagement, interaction with a non-human partner, etc.), the behavior that was modeled (e.g., participant-initiated interactive behavior, participants’ response to interaction partner, etc.), and other characteristics to guide future research. Importantly, annotations were made at the level of the contrast, not study, thereby enabling the use of individual contrasts for sub-analyses. For example, Caruana et al.19 reported contrasts examining when participants use their eyes to guide a partner’s attention and when participants respond to the eye-gaze cue of a partner, which can be used in separate analyses of initiating and responding behaviors, respectively. Full details and annotations for each contrast can be found in Supplemental Table 1.

Activation Likelihood Estimation (ALE)

Coordinate-based meta-analysis (CBMA) using the activation likelihood estimation (ALE)84,85 approach was implemented via the NiMare package (version 0.1.1) in Python (version 3.10).86 Coordinates reported in Talairach space were converted to MNI space using the Lancaster approach.87 ALE interprets the coordinates of each activation focus as the center of a three-dimensional Gaussian probability distribution whose full-width at half maximum (FWHM) is based on the sample size of the study (i.e., increasing sample size equates to decreasing uncertainty, or a smaller FWHM); this kernel was developed based on empirical estimates of spatial uncertainty due to between-subject and between-template variability.84 All foci from a single study are incorporated into a modeled activation (MA) map, and the updated ALE algorithm prohibits multiple foci from a single study from jointly influencing the MA value of a single voxel at the study level, thereby reducing the impact of single studies on group-level results.88 Additionally, multiple contrasts from a study are pooled and treated as a single contrast when calculating a study-level MA map to further reduce the influence of studies reporting results from numerous contrasts.
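To make the MA and ALE computations concrete, the sketch below builds study-level MA maps on a toy grid and combines them by probabilistic union, ALE = 1 − ∏(1 − MA). It is a simplified illustration, not the NiMare implementation. Its assumptions are as follows. The FWHM formula and its 5.7 mm (template) and 11.6 mm (subject) constants reflect our reading of the sample-size-dependent kernel of ref 84 and should be checked against the package source before reuse. The per-voxel probability is approximated as Gaussian density times voxel volume. The 2 mm grid and the example foci are invented. Taking the maximum over a study's foci, rather than the sum, is what keeps several foci from one study from jointly inflating a voxel.

```python
import math
from itertools import product


def ale_fwhm(n_subjects):
    """Kernel FWHM (mm) that shrinks as sample size grows.

    Assumption: template (5.7 mm) and subject (11.6 mm) uncertainty constants
    and this conversion reflect our reading of the ALE kernel; verify before reuse.
    """
    scale = math.sqrt(8 * math.log(2)) / (2 * math.sqrt(2 / math.pi))
    u_template = 5.7 * scale
    u_subjects = 11.6 * scale / math.sqrt(n_subjects)
    return math.sqrt(u_template ** 2 + u_subjects ** 2)


def modeled_activation(foci, n_subjects, grid, voxel_mm=2.0):
    """Study-level MA map: per voxel, the MAX probability over the study's foci."""
    sigma = ale_fwhm(n_subjects) / math.sqrt(8 * math.log(2))
    # Gaussian density times voxel volume approximates the probability per voxel.
    norm = voxel_mm ** 3 / (2 * math.pi * sigma ** 2) ** 1.5
    ma = {}
    for voxel in grid:
        ma[voxel] = max(
            norm * math.exp(-sum((v - f) ** 2 for v, f in zip(voxel, focus)) / (2 * sigma ** 2))
            for focus in foci
        )
    return ma


def ale_map(studies, grid):
    """ALE as the probabilistic union of MA maps: 1 - prod_i (1 - MA_i)."""
    not_active = {voxel: 1.0 for voxel in grid}
    for foci, n_subjects in studies:
        ma = modeled_activation(foci, n_subjects, grid)
        for voxel in grid:
            not_active[voxel] *= 1.0 - ma[voxel]
    return {voxel: 1.0 - p for voxel, p in not_active.items()}


# Toy 2 mm grid spanning -10..10 mm on each axis (hypothetical, not a brain mask).
grid = list(product(range(-10, 11, 2), repeat=3))
# One entry per study: foci pooled across all of its qualifying contrasts.
studies = [
    ([(0, 0, 0), (2, 0, 0)], 20),
    ([(0, 2, 0)], 35),
]
ale = ale_map(studies, grid)
print(max(ale, key=ale.get), round(max(ale.values()), 4))
```

Pooling contrasts before calling `modeled_activation` mirrors the study-level pooling described above. A study that reports the same peak in two contrasts therefore contributes to a voxel only once.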
The union of study-level MA maps is used to calculate ALE values that quantify the convergence of brain activity results at each voxel.89 Following best practice recommendations, these ALE values were evaluated against an empirical null distribution derived from a permutation procedure using a cluster-level familywise error threshold (cFWE) of p < 0.05 and a cluster-forming, voxel-level threshold of p < 0.001.51,90 Each permutation involves simulating random study-level data sets that have the same number of foci and sample size as the real study-level MAs, applying the cluster-forming threshold to the union of the simulated data, and recording the size of the largest above-threshold cluster at each iteration to create a null distribution of cluster sizes.85 This procedure was repeated 10,000 times for each meta-analysis, so that reported results have cluster sizes in the top 5% of the distribution of cluster sizes obtained from the permutations.
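The cluster-level inference step can be sketched as follows. For every permutation, the largest supra-threshold cluster is recorded, and an observed cluster's cFWE p-value is the fraction of permutations whose largest cluster is at least as big. The sketch assumes face-connected (6-neighbour) clusters, which is one of several possible connectivity rules. It also assumes the caller supplies `simulate(rng)`, a hypothetical callable returning the supra-threshold voxels of one null data set. In the real procedure, that callable would relocate each study's foci at random, recompute ALE, and apply the p < 0.001 cluster-forming threshold. The `toy_simulate` used below is only a random-voxel stand-in for that step.

```python
import random
from collections import deque

# Assumption: face-connected (6-neighbour) clusters; other connectivity rules exist.
NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def cluster_sizes(voxels):
    """Sizes of connected clusters in a set of supra-threshold (x, y, z) voxels, largest first."""
    remaining = set(voxels)
    sizes = []
    while remaining:
        queue = deque([remaining.pop()])
        size = 1
        while queue:
            x, y, z = queue.popleft()
            for dx, dy, dz in NEIGHBORS:
                neighbor = (x + dx, y + dy, z + dz)
                if neighbor in remaining:
                    remaining.remove(neighbor)
                    queue.append(neighbor)
                    size += 1
        sizes.append(size)
    return sorted(sizes, reverse=True)


def cluster_fwe_pvalues(observed, simulate, n_iters=10_000, seed=0):
    """Return (size, cFWE p-value) for each observed cluster.

    The null distribution holds the LARGEST cluster from each simulated data set,
    which is what makes the resulting p-values familywise-error corrected.
    """
    rng = random.Random(seed)
    null_max = [max(cluster_sizes(simulate(rng)), default=0) for _ in range(n_iters)]
    return [
        (size, sum(m >= size for m in null_max) / n_iters)
        for size in cluster_sizes(observed)
    ]


def toy_simulate(rng, shape=10, rate=0.02):
    """Toy null: independent random voxels (NOT an ALE simulation)."""
    return {
        (x, y, z)
        for x in range(shape) for y in range(shape) for z in range(shape)
        if rng.random() < rate
    }


observed = {(1, 1, z) for z in range(6)} | {(8, 8, 8)}
for size, p in cluster_fwe_pvalues(observed, toy_simulate, n_iters=200):
    print(size, p, "survives" if p < 0.05 else "does not survive")
```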

Primary and Secondary Coordinate-Based Meta-Analyses (CBMAs)

The primary CBMA (i.e., “All”) included all qualifying contrasts that met our inclusion criteria. The objective of this analysis was to elucidate brain regions commonly implicated in social interaction, across contexts and forms, by evaluating the convergence of brain activity reported across all studies meeting inclusion criteria. This included coordinates for 2,659 foci extracted from 108 studies (i.e., independent samples) from 105 articles representing 2,919 participants and 191 contrasts (Table 1A). We additionally conducted follow-up analyses to validate the results of the All CBMA. Leave-one-study-out cross-validation was used to verify that no single study drove the observed results. This involved conducting CBMA using all but one study, repeating this procedure for each of the 108 studies, and averaging together the binarized, thresholded results to visualize the spatial overlap of the iterated CBMAs. We further evaluated whether a few studies drove the clusters obtained from the All CBMA by implementing the focus counter and Jackknife functions of NiMare, which quantify the number of studies contributing to ALE clusters and the relative contribution of each study to ALE clusters, respectively.
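The leave-one-study-out procedure amounts to re-running the meta-analysis once per omitted study, binarizing each thresholded result, and averaging. The sketch below assumes a hypothetical callable `run_cbma(subset)` that returns the set of voxels surviving cFWE correction; `toy_cbma` (voxels reported by at least two studies) is only a stand-in for ALE plus correction. The output is the fraction of iterations in which each voxel survived. A value of 1.0 means the voxel survived regardless of which study was dropped.

```python
from collections import Counter


def leave_one_study_out(studies, run_cbma):
    """Fraction of leave-one-out CBMAs in which each voxel is significant.

    `run_cbma(subset)` is a hypothetical callable returning the set of voxels
    that survive thresholding for that subset of studies.
    """
    counts = Counter()
    for i in range(len(studies)):
        subset = studies[:i] + studies[i + 1:]
        counts.update(run_cbma(subset))  # binarized result: each surviving voxel counts once
    return {voxel: c / len(studies) for voxel, c in counts.items()}


def toy_cbma(subset, min_studies=2):
    """Toy stand-in for ALE + cFWE: keep voxels reported by >= min_studies studies."""
    counts = Counter(v for study in subset for v in set(study))
    return {v for v, c in counts.items() if c >= min_studies}


# Each study is represented by the set of (toy) voxel labels it reports.
studies = [{"A", "B"}, {"A"}, {"A", "C"}, {"B"}]
overlap = leave_one_study_out(studies, toy_cbma)
print(overlap)
```

Voxels that never survive are simply absent from the result, which corresponds to an overlap value of 0.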

The “Social Engagement” and “Interaction” CBMAs examined core components of social interaction by assuming that social engagement and interaction (Figure 1B and 1C, respectively) together comprise social interaction (Figure 1D). This approach enabled the identification of brain activity associated with either component by controlling for the other (see Figure 3). More specifically, the Social Engagement CBMA focuses on contrasts with control conditions involving comparable interactive task demands (i.e., similar reciprocity and/or decision-making) that occur outside the context of a real-time human partner, so that interaction is held constant and the subjective experience of social engagement is isolated. For example, contrasts comparing gameplay with a human partner against gameplay with a robot partner isolate brain activity associated with social engagement by controlling for the neurocognitive processes related to the interactive gameplay.31 Conversely, the Interaction CBMA focuses on contrasts against control conditions involving the presence of real-time human partners in non-interactive contexts, so that social engagement is held constant and the brain activity associated with reciprocal, interactive social behaviors is isolated. For instance, when participant-involved interactive behaviors (e.g., tossing or receiving the ball in a three-person game of catch) are contrasted against conditions in which participants are not interacting with their real-time social partners (e.g., observing ball tosses between the two other players),91 the contrast controls for social engagement and uncovers brain activity associated with the reciprocal, interactive behavioral component of the social interaction. Although these analyses are premised on the tenuous assumption of subtractive logic,92 they provide a crude means to evaluate the separability of brain regions associated with the components of social interaction.

The Social Engagement CBMA included the following types of contrasts: Human > Computer Interaction (control involves a real-time artificial agent, such as a computer algorithm or robot); Real-Time > Non-Real-Time Interaction (control involves pre-recorded, replayed, or pre-determined cues/responses from a social partner); Social Interaction > Solo Action (control involves similar task demands without social context, e.g., joint attention > solo attention, joint action > solo action); and Social Interaction > Non-Social Motor Control (lower-level control involving similar behavioral responses without social context; Figure 3B). This included 1,706 foci from 119 contrasts across 68 studies representing 1,776 participants. Because a substantial portion of contrasts in the Social Engagement CBMA utilized a common set of control conditions involving interactions with a computer or robot, we also conducted a targeted “Human vs Computer” meta-analysis using 436 foci from 53 contrasts across 33 studies representing 733 participants. Contrasts that qualified for the Interaction meta-analysis included: Social Interaction > Being Observed (control involves being observed by a social partner); Social Interaction > Social Observation (control involves observing social partners); Interaction > Simultaneous Non-Interactive Action (control involves simultaneous non-interactive behavior in a social context); and Interaction > Non-Interactive Motor Control (control task involves behaviors in a social context that are not contingent on and/or do not affect partner behavior, e.g., rule-based responding, responses hidden from partner; Figure 3C). This included 737 foci from 59 contrasts across 37 studies representing 970 participants. The diversity and greater number of contrasts in the Social Engagement versus Interaction CBMA presented challenges for making direct comparisons between CBMAs (see Table 1 for CBMA characteristics and the overlap between CBMAs).

We also conducted CBMAs that aimed to extend findings from the joint attention literature, which has revealed partially overlapping brain systems involved in coordinating mutual attention. Joint attention involves initiating behaviors (directing the other’s attention through eye-gaze cues) and responding behaviors (following the attentional cue made by the interaction partner; Figure 3D-E). However, no studies have systematically examined whether the brain systems associated with initiating and responding generalize to non-eye-gaze-based social interactions. To this end, we conducted separate analyses using studies that modeled behavior when participants engaged in an Initiating behavior that elicited a response from their partner (Figure 3D), and studies wherein the modeled behavior was of participants Responding to the actions of their partner (Figure 3E). These analyses excluded studies that modeled blocks during which there was a back-and-forth between participant and partner, as well as other studies that did not have clearly delineated initiating and responding events. The Initiating CBMA included 607 foci from 39 contrasts across 25 studies comprising 626 participants, drawn from studies wherein participants gestured or made facial expressions that were imitated by partners, made offers in a game of trust or shared opinions that partners responded to, and other studies that modeled the initiating behavior of the scanned participant. The Responding CBMA included 708 foci from 36 contrasts across 23 studies comprising 625 participants, drawn from studies wherein participants responded to offers made by another, made guesses based on hints provided by a partner, or imitated or followed a partner’s actions, and other studies that modeled the responding behaviors of the scanned participant.
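Because annotations were made at the contrast level, each sub-analysis reduces to filtering annotated contrasts by control type or by modeled behavior. The sketch below illustrates that selection. The annotation field names (`study`, `control`, `behavior`), the label strings, and the three example contrasts are hypothetical; the actual annotations are in Supplemental Table 1. Contrasts selected from the same study would still be pooled into one study-level MA map before running ALE.

```python
# Hypothetical annotation labels mirroring the contrast types listed above.
SOCIAL_ENGAGEMENT_CONTROLS = {
    "human > computer",
    "real-time > non-real-time",
    "social interaction > solo action",
    "social interaction > non-social motor control",
}
INTERACTION_CONTROLS = {
    "social interaction > being observed",
    "social interaction > social observation",
    "interaction > simultaneous non-interactive action",
    "interaction > non-interactive motor control",
}


def select(contrasts, **criteria):
    """Keep contrasts whose annotation in every given field is in the allowed set."""
    return [
        c for c in contrasts
        if all(c[field] in allowed for field, allowed in criteria.items())
    ]


# Hypothetical contrast-level annotations (one dict per contrast).
contrasts = [
    {"study": "s1", "control": "human > computer", "behavior": "initiating"},
    {"study": "s1", "control": "social interaction > social observation", "behavior": "responding"},
    {"study": "s2", "control": "real-time > non-real-time", "behavior": "back-and-forth"},
]
engagement = select(contrasts, control=SOCIAL_ENGAGEMENT_CONTROLS)
human_vs_computer = select(contrasts, control={"human > computer"})
interaction = select(contrasts, control=INTERACTION_CONTROLS)
# Back-and-forth blocks fall in neither the Initiating nor the Responding subset.
initiating = select(contrasts, behavior={"initiating"})
responding = select(contrasts, behavior={"responding"})
n_engagement_studies = len({c["study"] for c in engagement})
print(len(engagement), len(interaction), len(initiating), len(responding), n_engagement_studies)
```

The same contrast can enter several subsets (here `s1`'s human > computer contrast is in Social Engagement, Human vs Computer, and Initiating), which is why Table 1 reports the overlap between CBMAs.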

Meta-Analytic Connectivity Modeling (MACM) and Functional Decoding

We predicted that reciprocal social interaction would consistently engage diverse neurocognitive systems. To better characterize the brain networks and functions associated with social interaction, we conducted MACM and functional decoding analyses using each significant cluster uncovered by the All CBMA as an ROI. MACM uses a large database of neuroimaging articles to uncover brain areas commonly coactivated with a target ROI in the neuroimaging literature. We used the 14,371 articles in the Neurosynth database (version 7)93 and conducted MACM using the multilevel kernel density analysis (MKDA) chi-squared approach originally developed for Neurosynth,93,94 implemented via NiMare version 0.1.1.86 This approach involves splitting the database into studies that report activations within a target ROI and studies that do not. It then calculates “uniformity” (previously referred to as “consistency” or “forward-inference”) maps, estimated from the probability of coactivation in the selected studies reporting activity in the ROI, and “association” (previously referred to as “specificity” or “reverse-inference”) maps, calculated by estimating the voxel-level probability of coactivation with the ROI across the entire database.93,94 FWE corrections were made using 1,000 iterations of a Monte Carlo max-value permutation algorithm to create thresholded MACM association maps for each ROI, which were further used in clustering analyses (details below).
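The association map rests on a per-voxel 2×2 contingency table: studies are classified as selected or unselected (reporting activity in the ROI or not) and as active or inactive at the voxel. The table is then tested for independence with a chi-square statistic. The sketch below shows only that association test. It assumes each study's foci have already been kernel-smoothed and binarized (MKDA-style) into a set of active voxels. It omits the uniformity map and the Monte Carlo FWE correction. The one-degree-of-freedom p-value uses the identity P(χ²₁ > x) = erfc(√(x/2)).

```python
import math


def chi2_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for [[a, b], [c, d]]; 0 if a margin is empty."""
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    return 0.0 if denom == 0 else n * (a * d - b * c) ** 2 / denom


def macm_association(studies, roi, voxels):
    """Signed z and p per voxel for the association between ROI selection and voxel activation.

    `studies`: list of sets of active voxels per study (assumed already
    kernel-smoothed and binarized upstream).
    """
    selected = [s for s in studies if s & roi]
    unselected = [s for s in studies if not s & roi]
    results = {}
    for voxel in voxels:
        a = sum(voxel in s for s in selected)    # selected, active
        b = len(selected) - a                    # selected, inactive
        c = sum(voxel in s for s in unselected)  # unselected, active
        d = len(unselected) - c                  # unselected, inactive
        chi2 = chi2_2x2(a, b, c, d)
        p = math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square with 1 df
        # Positive z when activation is more likely in ROI-selected studies.
        sign = 1 if a * (c + d) > c * (a + b) else -1
        results[voxel] = (sign * math.sqrt(chi2), p)
    return results


# Toy database: "x" co-occurs with the ROI voxel "r"; "y" occurs only without it.
studies = [{"r", "x"}, {"r", "x"}, {"r", "x"}, {"y"}, {"y"}, {"y"}]
assoc = macm_association(studies, roi={"r"}, voxels=["r", "x", "y"])
print(assoc)
```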

Functional decoding follows a procedure similar to MACM, but it is used to infer the cognitive processes associated with a target ROI based on study features. Studies are split based on whether they report activation in the target ROI, and forward- and reverse-inference probabilities are calculated for each of the features with which the studies in a given database are tagged.86 We implemented Neurosynth-style decoding via NiMare, which uses a chi-squared approach similar to that of the MACMs and is appropriate for decoding larger ROIs (i.e., the All CBMA clusters) because, unlike the Brainmap decoding algorithm,95 it does not estimate probabilities based on the total number of foci in the database.86 We conducted functional decoding using the Brainmap.org database because it provides careful annotation of a curated set of behavioral domains, paradigm classes, and other study features for each of the 4,226 articles in the database.96,97 We also conducted functional decoding using the larger, less constrained feature spaces of the Neurosynth database, which were derived via Latent Dirichlet allocation (LDA) to create sets of 50, 100, 200, and 400 topics from the thousands of terms in the Neurosynth database.93,98 These topics were derived by applying data-driven topic modeling to the frequency of every word in the corpus of abstracts associated with the studies in the Neurosynth database.93
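The forward- and reverse-inference quantities behind decoding can be sketched with a few array operations. This is a simplified illustration under stated assumptions: features are binary study labels (e.g., a BrainMap behavioral domain, or an LDA topic whose weight exceeds some cutoff), and reverse inference uses Bayes’ rule with a uniform prior of 0.5 on each feature, as in Neurosynth-style decoding. The study itself clustered reverse-inference z-values from a chi-squared test, which this sketch does not compute; the function name and toy data are hypothetical.

```python
import numpy as np


def decode_roi(selected, features, prior=0.5):
    """
    selected: (n_studies,) boolean; True if a study reports activation in the ROI.
    features: (n_studies, n_features) boolean; study-level labels.
    Returns forward inference P(ROI active | feature) and reverse inference
    P(feature | ROI active), the latter via Bayes' rule with a uniform prior on each feature.
    """
    sel = selected.astype(float)[:, None]
    f = features.astype(float)
    p_act_f = (f * sel).sum(axis=0) / np.maximum(f.sum(axis=0), 1)                  # forward
    p_act_not_f = ((1 - f) * sel).sum(axis=0) / np.maximum((1 - f).sum(axis=0), 1)
    num = p_act_f * prior
    denom = num + p_act_not_f * (1 - prior)
    reverse = np.divide(num, denom, out=np.zeros_like(num), where=denom > 0)
    return p_act_f, reverse


# Toy data: 8 studies, the first 4 report ROI activation.
selected = np.array([1, 1, 1, 1, 0, 0, 0, 0], dtype=bool)
features = np.array([
    [1, 1], [1, 0], [1, 1], [0, 0],   # feature 0 occurs only in ROI-activating studies
    [0, 1], [0, 0], [0, 1], [0, 0],   # feature 1 is unrelated to ROI activation
], dtype=bool)
forward, reverse = decode_roi(selected, features)
```

Here feature 0 yields a reverse-inference probability of 5/6, whereas the unrelated feature 1 stays at the prior of 0.5; a vector of such per-feature values is what each ROI contributes to the decoded-function correlations.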

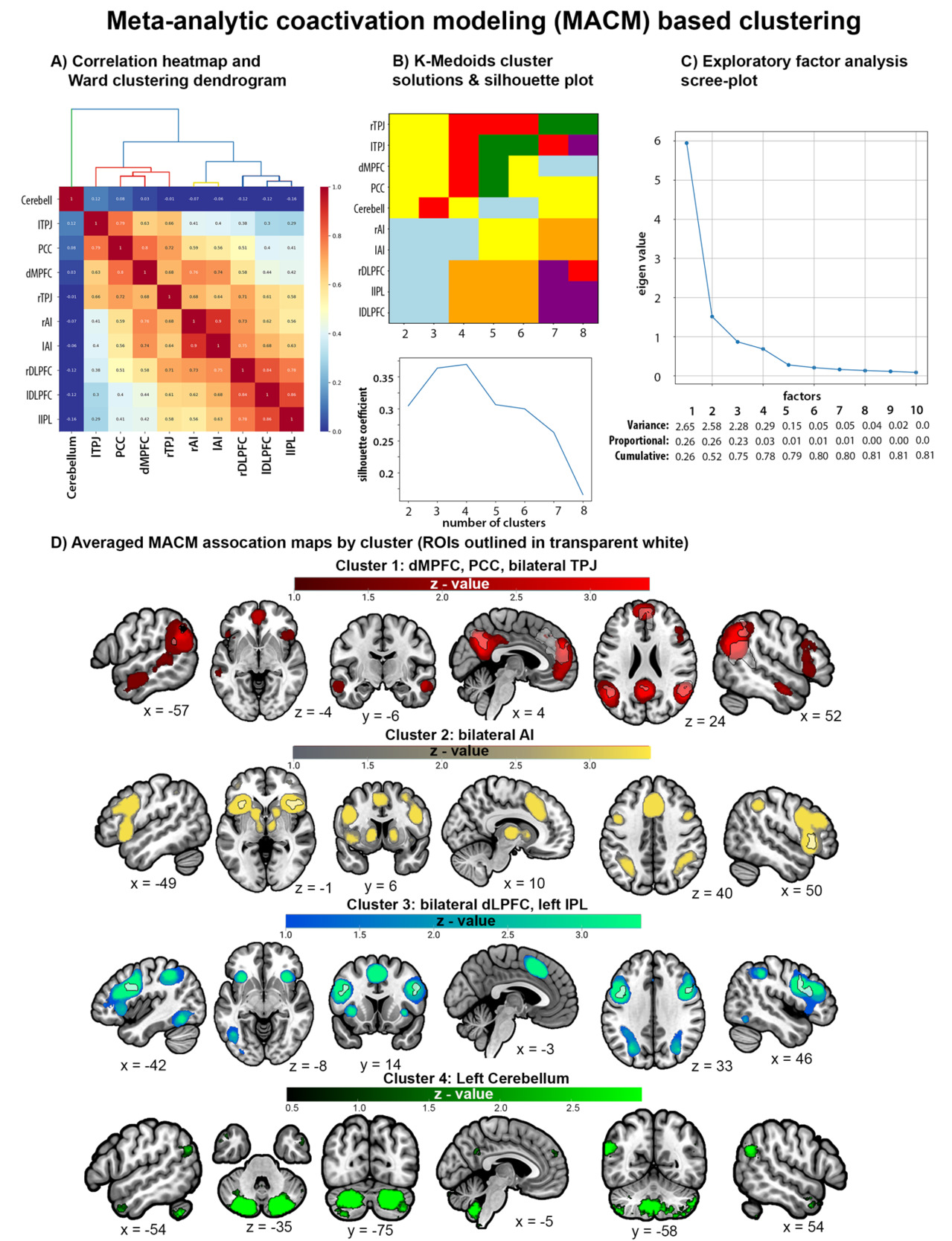

The MACM and functional decoding results for each ROI of the All CBMA were further used in meta-analytic clustering analyses. We evaluated how ROIs cluster together based on their meta-analytic connectivity by calculating correlations between each pair of unthresholded MACM association z-maps and then clustering the resulting ROI-by-ROI correlation matrix (similar to the approach in Amft95). We also assessed how ROIs cluster together based on decoded function by calculating pairwise correlations between the ROIs’ vectors of reverse-inference z-values for the features of the Brainmap.org database, as well as for the decoded features from each of the Neurosynth LDA databases of 50, 100, 200, and 400 topics. For both MACM- and decoded function-based clustering, we evaluated and visualized the relationships between ROIs in three ways: 1) correlation heatmaps with dendrograms estimated using the Ward clustering algorithm99 on the Euclidean distances between ROIs, to visualize pairwise and hierarchical organization; 2) exploratory factor analysis (EFA)100 visualized via scree plots, to represent the variance associated with underlying factors; and 3) K-medoids clustering101 to visualize global solutions for different numbers of clusters and their associated silhouette coefficients, a goodness-of-fit measure for clustering solutions.102 K-medoids (similar to K-means) was used because it can be applied to the pre-calculated ROI-by-ROI correlation matrices described above (i.e., those based on MACM association maps and decoded functions).101 The motivation for using different clustering approaches was to explore the relationships between ROIs from complementary perspectives.
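The first two clustering views can be sketched with SciPy and NumPy. This is a minimal illustration, not the authors’ notebook code: Ward linkage is applied to Euclidean distances between rows of the ROI-by-ROI correlation matrix, and the scree values are eigenvalues of that matrix, a principal-components simplification of EFA (no factor extraction or rotation). The toy “maps” are simulated; in the study each row would be a flattened MACM association z-map or a vector of decoded z-values.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage


def ward_clusters(corr, n_clusters):
    """Ward linkage on Euclidean distances between the rows of an ROI-by-ROI correlation matrix."""
    tree = linkage(corr, method="ward", metric="euclidean")
    return fcluster(tree, t=n_clusters, criterion="maxclust")


def scree(corr):
    """Eigenvalues of the ROI correlation matrix (descending) and cumulative variance explained.
    A principal-components simplification of the EFA scree plot."""
    eigenvalues = np.sort(np.linalg.eigvalsh(corr))[::-1]
    return eigenvalues, np.cumsum(eigenvalues) / eigenvalues.sum()


# Toy data: six ROI "maps" generated from two latent patterns plus noise.
rng = np.random.default_rng(1)
patterns = rng.standard_normal((2, 500))
maps = np.vstack([patterns[i // 3] + 0.3 * rng.standard_normal(500) for i in range(6)])
corr = np.corrcoef(maps)                  # 6 x 6 ROI-by-ROI correlation matrix
labels = ward_clusters(corr, n_clusters=2)
eigenvalues, cumulative = scree(corr)
```

With two latent patterns, the first two eigenvalues dominate the scree and Ward recovers the two groups of three ROIs; the eigenvalue cutoff (0.5 in the Results) and the cumulative variance explained are read from the same two arrays.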
Ideally, similar clustering solutions achieved through different methods would provide converging evidence for the common brain systems and functions underlying social interaction, but divergent results can also inform the structure of relationships between ROIs. For instance, hierarchical clustering using Ward linkage is an agglomerative approach that starts locally, treating each ROI as its own cluster, and sequentially merges the pair of clusters whose fusion least increases within-cluster variance until only one cluster remains, which provides information about the hierarchical relationships between nodes. K-medoids, on the other hand, is a global approach that groups nodes into a pre-specified number of non-overlapping clusters by minimizing within-cluster variance and maximizing between-cluster variance,95 and provides insight into the separability of groups at different levels of clustering. Clustering analyses were conducted in Python version 3.10 using custom code built on functions from various packages, including scikit-learn (scikit-learn.org), NiMare (nimare.readthedocs.io), and Nilearn (nilearn.github.io). Full details and executable code notebooks can be found at https://github.com/JunaidMerchant/SocialInteractionMetaAnalysis.
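The K-medoids and silhouette step can be sketched as follows, assuming 1 − r as the dissimilarity between ROIs (an assumption; the text does not specify the conversion). The core scikit-learn package does not include K-medoids, so `k_medoids` below is a minimal alternating (Voronoi-iteration) implementation written for illustration, not the one used in the study; `silhouette_score` with `metric="precomputed"` is scikit-learn’s own function.

```python
import numpy as np
from sklearn.metrics import silhouette_score


def k_medoids(dist, k, n_iter=100):
    """Minimal alternating (Voronoi-iteration) K-medoids on a precomputed dissimilarity matrix."""
    medoids = [int(np.argmin(dist.sum(axis=1)))]                 # start from the most central item
    while len(medoids) < k:                                      # add the item farthest from current medoids
        medoids.append(int(np.argmax(dist[:, medoids].min(axis=1))))
    medoids = np.array(medoids)
    for _ in range(n_iter):
        labels = np.argmin(dist[:, medoids], axis=1)             # assign each ROI to its nearest medoid
        updated = medoids.copy()
        for j in range(k):
            members = np.flatnonzero(labels == j)
            if members.size:                                     # new medoid minimises within-cluster distance
                within = dist[np.ix_(members, members)].sum(axis=1)
                updated[j] = members[np.argmin(within)]
        if np.array_equal(updated, medoids):
            break
        medoids = updated
    return np.argmin(dist[:, medoids], axis=1), medoids


def silhouette_by_k(corr, ks):
    """Silhouette coefficient of the K-medoids solution for each k, using 1 - r as the distance."""
    dist = 1.0 - corr
    np.fill_diagonal(dist, 0.0)                                  # precomputed metric needs a zero diagonal
    return {k: silhouette_score(dist, k_medoids(dist, k)[0], metric="precomputed") for k in ks}


# Toy data: six ROI "maps" generated from two latent patterns plus noise.
rng = np.random.default_rng(1)
patterns = rng.standard_normal((2, 500))
maps = np.vstack([patterns[i // 3] + 0.3 * rng.standard_normal(500) for i in range(6)])
corr = np.corrcoef(maps)
scores = silhouette_by_k(corr, ks=[2, 3])
labels, medoids = k_medoids(1.0 - corr, k=2)
```

Scanning `ks` and comparing silhouette values mirrors how the number of clusters was evaluated; on this toy data the 2-cluster solution scores highest because forcing a third cluster splits one tight group.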

To provide a more spatially specific understanding of the connectivity between brain areas, we also conducted exploratory MACM, functional decoding, and clustering analyses using the 15 sub-peak coordinates from the All CBMA (see Table 2) as seeds for 6-millimeter spherical ROIs (see Supplemental Materials: Exploratory sub-peak analyses). Unlike the primary decoding analysis, this analysis used NiMare’s Brainmap decoder function, which is more appropriate for seed-based ROIs because it accounts for the number of foci per study in the larger dataset used for decoding.86 Finally, we conducted clustering based on resting-state functional connectivity (rsFC) maps for each sub-peak obtained via Neurosynth, which provides whole-brain rsFC maps based on data from 1,000 subjects.103–105 As with MACM-based clustering, we calculated pairwise correlations between each pair of rsFC maps.
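Building a 6-mm spherical ROI around a sub-peak amounts to selecting voxels whose centres lie within 6 mm of the coordinate. The sketch below assumes the standard MNI152 2-mm grid (shape 91 × 109 × 91 and the affine shown), which the text does not state, and uses a hypothetical seed coordinate rather than a sub-peak from Table 2. In practice, Nilearn or NiMare utilities would typically be used instead of this hand-rolled version.

```python
import numpy as np

# Assumed MNI152 2-mm grid (common template geometry); not taken from the paper.
MNI_2MM_AFFINE = np.array([
    [-2.0, 0.0, 0.0, 90.0],
    [0.0, 2.0, 0.0, -126.0],
    [0.0, 0.0, 2.0, -72.0],
    [0.0, 0.0, 0.0, 1.0],
])
MNI_2MM_SHAPE = (91, 109, 91)


def sphere_mask(center_mm, radius_mm=6.0, affine=MNI_2MM_AFFINE, shape=MNI_2MM_SHAPE):
    """Boolean mask of voxels whose centres lie within radius_mm of a world-space (mm) coordinate."""
    ijk = np.indices(shape).reshape(3, -1)                     # voxel indices, shape (3, n_voxels)
    xyz = affine[:3, :3] @ ijk + affine[:3, 3:4]               # voxel centres in mm
    offsets = xyz - np.asarray(center_mm, dtype=float)[:, None]
    dist = np.linalg.norm(offsets, axis=0)
    return (dist <= radius_mm + 1e-6).reshape(shape)           # small tolerance for float rounding


# Hypothetical seed coordinate (not a sub-peak from Table 2), chosen to sit on a voxel centre.
roi = sphere_mask((40.0, -50.0, 30.0))
print(roi.sum())
```

On a 2-mm grid a 6-mm radius spans 3 voxels, so a sphere centred on a voxel contains the 123 lattice points with i² + j² + k² ≤ 9; small ROIs like this are why the foci-aware Brainmap decoder was preferred for the sub-peak analyses.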

Results

All

The All CBMA included all 108 studies and revealed significant convergence in 10 clusters: a large medial prefrontal cluster spanning dorsal and anterior portions of the MPFC (dMPFC and aMPFC) and dorsal and perigenual portions of the anterior cingulate cortex (dACC and pgACC), bilateral temporoparietal junction (TPJ), bilateral anterior insula (AI), bilateral dorsolateral prefrontal cortex (dlPFC), medial posterior cingulate cortex (PCC) extending into precuneus, left inferior parietal lobule (IPL), and left cerebellum (Figure 4, Table 2). Leave-one-study-out cross-validation indicated that the findings are not attributable to any single study, such that 95% or more of the iterated CBMAs showed spatial overlap comparable to the original All CBMA results (Supplemental Figure 1). Moreover, focus counter analysis revealed that at least 10 studies contributed to each of the All clusters (Supplemental Table 2), and jackknife analysis indicated that no single study contributed more than 14% of the likelihood estimates of any All cluster (Supplemental Table 3). Given the wide range of experimental approaches, social interactive contexts, and perceptual modalities represented in the set of qualifying articles, these results strongly support a core set of brain areas underlying social interaction.
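The jackknife diagnostic can be illustrated under the assumption that the CBMA is ALE-based (the “likelihood estimates” suggest this, but the method is not named in this section). The ALE value at a voxel is the union 1 - prod(1 - MA_i) over the studies’ modeled-activation (MA) maps, and a study’s contribution is estimated from how much the summed ALE inside a cluster drops when that study is left out. Normalizing the drops to sum to 1 is a simplification chosen for this sketch and may differ from the exact formula of the tool the authors used; the toy MA values are invented.

```python
import numpy as np


def ale(ma):
    """ALE union across studies: 1 - prod_i (1 - MA_i), computed voxel-wise."""
    return 1.0 - np.prod(1.0 - ma, axis=0)


def jackknife_contributions(ma, cluster_mask):
    """
    ma: (n_studies, n_voxels) modeled-activation probabilities.
    For each study, the drop in summed ALE inside the cluster when that study is left out,
    expressed as a fraction of the summed drops (a simplified jackknife diagnostic).
    """
    full = ale(ma)[cluster_mask].sum()
    drops = np.array([
        full - ale(np.delete(ma, i, axis=0))[cluster_mask].sum()
        for i in range(ma.shape[0])
    ])
    total = drops.sum()
    return drops / total if total > 0 else drops


# Toy example: three studies, two voxels; the cluster is voxel 0 only.
ma = np.array([
    [0.5, 0.1],
    [0.5, 0.0],
    [0.0, 0.3],   # contributes nothing inside the cluster
])
cluster = np.array([True, False])
contributions = jackknife_contributions(ma, cluster)
```

In the toy case each of the first two studies accounts for half of the cluster’s likelihood estimate; a cap such as “no single study above 14%” is a statement about the maximum of this vector for each cluster.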

Social Engagement and Interaction

The Social Engagement CBMA isolated social engagement by using contrasts that had a non-social, interactive control condition. This analysis revealed significant convergence in 11 clusters, including many of the same brain areas as the All CBMA, such as bilateral TPJ, bilateral AI, bilateral dlPFC, precuneus/PCC, and left IPL, as well as the ventral striatum (VS) and separable, non-overlapping dorsal and anterior MPFC/dACC clusters (Figure 5 in yellow, Table 3A). A supplementary analysis focusing on studies reporting the most common contrast, Human vs Computer, uncovered a more constrained set of 9 clusters, which included right TPJ, bilateral AI, precuneus/PCC, VS, and four separable MPFC clusters: ventral MPFC (vMPFC), aMPFC, left dACC/dMPFC, and right dMPFC (Supplemental Figure 2, Supplemental Table 4). The CBMA targeting Interaction, which focused on contrasts that used a socially engaged yet non-interactive control condition, uncovered seven clusters, including bilateral AI, right posterior superior temporal sulcus (pSTS) extending into TPJ, and separable dMPFC (extending into posterior dACC) and aMPFC/dACC clusters that partially overlapped with Social Engagement (in green), as well as spatially separable clusters in left IFG and left pSTS (Figure 5 in blue, Table 3B).

Initiating and Responding

CBMA targeting Initiating behaviors in reciprocal social interactions revealed significant convergence in six primarily right-lateralized regions, including dMPFC, dACC, right IPL, right TPJ, and bilateral AI (Figure 6 in blue, Table 4A). CBMA targeting Responding behaviors in reciprocal social interactions revealed significant convergence in six areas, including right pSTS extending into TPJ and right AI, which partially overlap with Initiating (in green), as well as spatially separable clusters in right dlPFC, right IFG, left IFG/dlPFC, left IPL, and left primary visual cortex (Figure 6 in yellow, Table 4B).

MACM and Functional Decoding

Thresholded MACM association maps for each ROI can be found in Supplemental Figure 3. MACM-based clustering was performed using unthresholded MACM association maps (i.e., “specificity” maps) to calculate correlations between each pair of ROIs. EFA supported a 4-factor solution, in which four factors had eigenvalues above 0.5 and together explained 78% of the cumulative variance. Silhouette coefficients from K-medoids also indicated the best fit for 4 clusters, and K-medoids yielded a 4-cluster solution identical to that from Ward clustering. MACM maps for ROIs belonging to the same cluster were averaged for visualization. MACM Cluster 1 includes dMPFC, bilateral TPJ, and PCC, and has connectivity with other regions of the mentalizing network. MACM Cluster 2 includes bilateral AI and has connectivity with frontoparietal regions, subcortical striatal structures, and other regions of the midcingulo-insular network. MACM Cluster 3 includes bilateral dlPFC and left IPL and has connectivity with bilateral AI, posterior inferior temporal regions, and other lateral frontoparietal areas. MACM Cluster 4 includes only the left cerebellum and has connectivity with other cerebellar regions, bilateral TPJ, and bilateral anterior temporal lobe (ATL; Figure 7).

Supplemental MACM- and rsFC-based clustering of the 15 spherical ROIs (which included the right IPL subpeak of the TPJ cluster, the right IFG subpeak of the AI cluster, and the aMPFC, dACC, and pgACC subpeaks of the dMPFC cluster; Table 2) was broadly consistent with the primary MACM clustering analysis: both supported 3- to 5-cluster solutions with strong connectivity among mentalizing network regions (i.e., pgACC, aMPFC, bilateral TPJ, and PCC) and distinguished different sets of frontoparietal networks (Supplemental Figures 6-7). However, while MACM-based clustering largely recreated the groupings of the primary analysis, separating the left cerebellum as its own cluster, rsFC-based clustering revealed that cerebellar connectivity was strongly correlated with the connectivity profiles of the aforementioned mentalizing regions, right AI, and right IPL (r = 0.55-0.77). This discrepancy likely reflects biases in the reporting of cerebellar activity in the extant neuroimaging literature used for MACM.

Decoded function-based clustering was conducted by calculating pairwise correlations between the ROIs’ reverse-inference z-values for the Brainmap and Neurosynth LDA database study features. Clustering analysis of Brainmap decoded functions yielded slightly different and less consistent clustering solutions than MACM-based clustering. EFA supported a 4-factor solution, with four factors having eigenvalues above .5 that explained 53% of the cumulative variance, while silhouette coefficients from K-medoids indicated a comparable fit for 2- to 4-cluster solutions; the K-medoids 4-cluster solution was identical to that from Ward clustering (Figure 8A-C). Clustering analysis of the Neurosynth LDA 400 decoded functions provided more consistent support for a 4-cluster solution, as demonstrated by the scree plot showing that four factors had eigenvalues above .5 and explained 69% of the cumulative variance, the silhouette coefficient plot indicating the strongest fit for a 4-cluster solution, and the identical 4-cluster solutions uncovered by K-medoids and Ward (Figure 8D-F). This is consistent with clustering analyses of decoded functions from the Neurosynth LDA 50, LDA 100, and LDA 200 datasets, each of which provided strong support for a 4-cluster solution and yielded identical 4-cluster solutions (Supplemental Figure 4). Thus, we focused on the 4-cluster solution that is relatively consistent across the Brainmap and Neurosynth datasets (see Figure 8 and Supplemental Figure 4).

Supplemental Figure 5 visualizes the overlapping decoded functions in a Venn diagram, and Supplemental Table 5 lists the reverse-inference z-scores for Brainmap and Neurosynth features organized by decoded cluster. They reveal that the decoded functions associated with each cluster alone were relatively narrow. For example, Decoded Cluster 1 (C1) includes bilateral TPJ and PCC, which are involved in imagined events (e.g., vignettes). Decoded Cluster 2 (C2) includes bilateral AI and dACC/dMPFC, which are related to internal regulatory processes like reward and anticipation, while Decoded Cluster 3 (C3) includes bilateral dlPFC and left IPL, which are associated with perceptual-motor control processes like action preparation and reading. Decoded Cluster 4 (C4) includes only the left cerebellum, which is important for timing. However, a broader picture emerges when investigating combinations of decoded clusters that map onto the MACM clusters. For example, combining decoded C1 (TPJ, PCC) with C2 (dMPFC) and C4 (cerebellum) reveals functions associated with theory of mind, default mode, and mental state reasoning (Supplemental Figure 5; Supplemental Table 5). These regions (TPJ, PCC, dMPFC) map onto MACM Cluster 1. Similarly, combining decoded C3 (dlPFC, IPL) with C2 regions (dACC) maps onto MACM Cluster 3 and is associated with a broader set of functions related to cognitive control. MACM Cluster 2 maps well onto the regions from decoded Cluster 2 (Figures 7-8).

Discussion

The current study used data-driven investigations of extant neuroimaging literature to advance our understanding of the neurocognitive systems involved in human social interaction. Focusing on studies that involve real-time, reciprocal exchanges between participants and social partners, we had three primary aims: 1) uncover a common set of brain regions underlying diverse forms of social interactive behavior; 2) assess whether dissociable brain systems contribute to core components of social interaction; and 3) explore the brain systems and cognitive functions associated with these regions. Meta-analytic investigations of 108 social interaction neuroimaging studies revealed significant convergence in brain regions associated with social cognition (dMPFC, PCC/precuneus, TPJ, and cerebellar Crus I/II), frontoparietal clusters related to higher-order perceptual-motor processes (IPL and dlPFC/premotor cortex), and midcingulo-insular regions associated with affective processes like reward (dACC and AI).45,46,106 Thus, while social cognition in non-interactive contexts involves the subset of brain regions typically associated with mentalizing, an extended set of neurocognitive systems underlies real-time social interactive behavior.1,10 These regions are consistent with a prior meta-analysis of social interaction,45 but our findings extend prior work by demonstrating that partially dissociable neurocognitive systems may contribute to core components of social interactive behavior. Specifically, social engagement with a human (versus non-human) partner consistently implicated social-cognitive and reward system regions (VS and vMPFC), while interactive social behaviors engaged regions associated with lateral attention networks (IFG and pSTS).18,107,108 Our findings highlight the importance of second-person studies for understanding the interplay of neurocognitive systems facilitating naturalistic social behavior.

Brain systems commonly engaged during social interaction

Meta-analytic connectivity modeling and functional decoding of the key regions identified in the All meta-analysis suggest that nodes from four overlapping subsystems contribute to social interaction: 1) a social cognition subsystem encompassing default mode network regions, including the TPJ, PCC/precuneus, and anterior portions of the MPFC, that is associated with mentalizing, self-reflection, empathy, and other higher-order social cognitive processes; 2) a midcingulo-insular subsystem that includes bilateral AI and dACC and is important for mediating internally and externally oriented information processing streams (e.g., those involved in emotion, reward, pain, and cognitive control); 3) a frontoparietal subsystem, including bilateral dlPFC/IFG and IPL, that is associated with action observation and execution, memory, language, cognitive control, attention, and other higher-order executive functions involved in reciprocal interactive behaviors; and 4) a cerebellar-TPJ network that is associated with socio-emotional processing. These networks are core components of active inference and predictive processing frameworks,109–111 including those applied to social interactive contexts.7,42,112 Their activation in social-interactive contexts may reflect the greater demands that social interactions place on predictive processing: individuals need to make recursive predictions about both themselves and others and to update their models in real time based on the internal and external sensory outcomes of their social partners’ actions.

Mentalizing, or Default Mode, Network

Throughout our analyses, we found significant convergence in nodes of the mentalizing network, including bilateral TPJ, MPFC, and PCC, which overlaps with the default mode network. The functional decoding analysis confirmed that these clusters are associated with social cognition and mentalizing, while MACM revealed that they are frequently coactivated with other brain areas associated with social cognition and reward processing, including the STS, anterior temporal lobes (ATL), and vMPFC (Figures 7-8). These findings support the idea that reasoning about others’ mental states is spontaneously elicited in social interactive contexts regardless of task demands.18,113 They are also consistent with theoretical accounts that implicate regions of the mentalizing network in active inference and predictive processing during social interactions.7,114 For instance, the 3D model outlines how social information is represented in the mentalizing network to infer the traits and states of an interaction partner and thereby better predict their actions,114 while active inference accounts suggest that nodes of the default mode network, including the TPJ, are involved in a nested deep tree search to recursively predict possible outcomes of one’s own and one’s partner’s potential actions.7 Mutual prediction theories also place regions of the mentalizing network, particularly the MPFC, at the center of social interaction.43,115,116 Specifically, rodent research has identified separate populations of neurons in the MPFC that encode one’s own behavior and the behavior of a social partner during social interaction, which leads to synchronous brain activity between social partners as their predictive models align.115–117

The MPFC is a multifunctional region with nodes corresponding to multiple large-scale brain networks.118 The MPFC cluster identified in the All CBMA was large, with subpeaks encompassing dMPFC and dACC. Our supplemental MACM and decoding analyses of dMPFC subpeaks (Supplemental Figures 7-10) are somewhat consistent with prior connectivity studies revealing dorsal-ventral MPFC distinctions.118–121 That is, we found that the aMPFC and perigenual ACC subpeaks were more associated with social cognition and emotion and were connected to other regions of the mentalizing and reward networks. In contrast, the dMPFC and dACC subpeaks were more associated with attentional processes and were connected to AI (Supplemental Figure 8; Supplemental Table 6). The different subregions of the MPFC may work together to integrate social contextual information with internal models of the self during social interactions.

Our MACM identified a separate network involving bilateral posterior cerebellum (Crus I/II) and left TPJ. Our decoding analyses highlighted associations between this network and theory of mind and empathy tasks, consistent with prior work highlighting a role for this region of the cerebellum in mentalizing.122–124 As in the motor domain, the cerebellum plays a role in developing predictive models and adapting or calibrating them based on feedback. Within a social interaction, the posterior cerebellum may develop a model of one’s social partner to predict future behavior and adapt that model based on feedback from the partner.111 The posterior cerebellar region is part of the default mode network125 but showed only moderate support for meta-analytic coactivation with DMN regions more broadly. However, we believe this weak association is due to the low number of studies reporting the cerebellum in prior literature. MACM and functional decoding analyses calculate likelihood estimates based on the selection of studies reporting foci within the target ROI from the Brainmap and Neurosynth databases (comprising 7,643 and 14,371 studies, respectively), yet only 17 Brainmap studies (0.2%) and 96 Neurosynth studies (0.7%) reported activation foci in the cerebellum ROI. For context, 197-695 (3-9%) Brainmap studies and 1,006-3,258 (7-23%) Neurosynth studies reported activation foci for the other 9 ROIs. This lack of data likely reflects the fact that studies typically report only regions within the cerebral cortex, which severely limits our understanding of the role of the cerebellum in social interaction.
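The reporting-rate comparison can be checked directly from the counts given above; this short sketch only recomputes the percentages from those numbers and introduces no new data.

```python
def percent(k, n):
    """Percentage of n database studies that report foci in an ROI."""
    return 100.0 * k / n


BRAINMAP_N, NEUROSYNTH_N = 7_643, 14_371   # database sizes reported in the text

cerebellum = (percent(17, BRAINMAP_N), percent(96, NEUROSYNTH_N))
other_rois_brainmap = (percent(197, BRAINMAP_N), percent(695, BRAINMAP_N))
other_rois_neurosynth = (percent(1_006, NEUROSYNTH_N), percent(3_258, NEUROSYNTH_N))

for label, (lo, hi) in [("cerebellum", cerebellum),
                        ("other ROIs, Brainmap", other_rois_brainmap),
                        ("other ROIs, Neurosynth", other_rois_neurosynth)]:
    print(f"{label}: {lo:.1f}% to {hi:.1f}%")
```

Even the least frequently reported of the other nine ROIs (197 Brainmap studies) is reported roughly ten times more often than the cerebellum ROI (17 studies), which is the imbalance the coactivation estimates inherit.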

Midcingulo-insular Systems

Across our analyses, we consistently found significant convergence in the AI, particularly in the right hemisphere. The AI and dACC are considered to be major hubs of the midcingulo-insular “salience” network, which is involved in identifying important information by mediating the interplay between internally and externally oriented attentional systems.106,107,126–128 This is consistent with the MACM and decoding of our bilateral AI clusters, which demonstrated connectivity with VS and other subcortical structures linked to reward and interoceptive processes, as well as with frontoparietal regions associated with conflict monitoring and other cognitive control and attention processes (Supplemental Figure 8; Supplemental Tables 5-6).

The AI is a key node of the interoceptive network. As part of the limbic cortex, the AI receives input from internal systems and uses it to generate predictions about the effects of different possible actions on one’s bodily state and to adjust accordingly.110 The demand on these systems is greater in an interactive context, as one attunes to the internal state of one’s social partner.7 Indeed, considerable research has implicated the AI in empathic processes that underlie our ability to personally experience and understand the thoughts and feelings of others.128–131 Evidence from second-person studies has been used to characterize the AI and dACC as components of the social attentional system that monitors for and detects social misalignment.132 For instance, Koike et al. demonstrated heightened activity in the right AI when participants attempted to initiate joint attention via eye-gaze cues directed at a partner, and this activity became correlated with the partner’s right AI activity when joint attention was achieved.133 Research has also demonstrated the AI’s contributions to social alignment in other cognitive domains, such as coupling between speakers and listeners134 and emotional alignment between partners watching movies together.135 Thus, the AI’s role in mediating between internal and external processes may be important for social alignment during interaction.

Frontoparietal systems

We also found significant convergence in different lateral frontoparietal structures throughout our analyses, including dlPFC, IFG, and IPL clusters that extended into the angular gyrus and pSTS. In line with research indicating that the midcingulo-insular network has strong functional connections to frontoparietal nodes of attention and cognitive control networks,107 our MACM- and decoding-based clustering revealed that bilateral AI and frontoparietal clusters were more strongly correlated with each other than with regions associated with mentalizing (TPJ and PCC; Figures 7-8). Moreover, these frontoparietal clusters overlap with parts of the putative human mirror neuron system (MNS), which links action observation and execution and is thought to underlie our ability to understand others’ intentions through embodied simulation.46,136–138 Second-person studies suggest that the MNS is important for the dynamic coupling that occurs between interacting individuals, such as the perceptual and behavioral coordination involved in joint actions, as well as the linguistic and social cognitive coupling that creates shared understanding between speakers and listeners.36,37,46,57 This interpretation is consistent with recent reviews indicating that regions of the MNS and midcingulo-insular network are part of an intermediary social cognitive subsystem that integrates motor/perceptual, limbic, and higher-order social cognitive processes.44,128,139 Together, these findings suggest that frontoparietal regions interface with other neurocognitive systems to facilitate cognitive and behavioral coupling between individuals during interactive social behavior.

Social Engagement & Interaction components are associated with distinct regions within mentalizing and reward networks

The Social Engagement and Interaction components identified dissociable regions within the mentalizing network. For example, we found anterior, ventral clusters of the TPJ and pSTS associated with Interaction that were separable from the dorsal-posterior TPJ clusters associated with Social Engagement. Different higher-order cognitive processes have been attributed to the TPJ, including attentional re-orienting140,141 and mental state reasoning.142–144 Studies parsing functional subdivisions of the TPJ suggest that anterior portions into pSTS underlie attentional processes associated with mentalizing based on overt cues (e.g., actions and behaviors). However, posterior TPJ into IPL is associated with inferring covert mental states.47,144–146 This is consistent with second-person studies that demonstrate the sensitivity of the TPJ to perceived human-likeness and intentionality of interaction partners,31–34,62 and non-invasive brain stimulation studies that provide causal evidence for the role of the right TPJ in social interactions, such that excitatory stimulation enhances social abilities like perspective-taking, while disruptive stimulation hinders strategic social behavior.147–150 These functional attributes have led to recent proposals suggesting that the TPJ/IPL is part of a social attention system that is important for monitoring and aligning with social interaction partners.132,151 Thus, the TPJ subdivisions likely contribute differentially to social attention, mentalizing, and social cognitive alignment during social interaction.

We also found cortical midline differences between Social Engagement and Interaction. Both CBMAs yielded separable anterior and dorsal MPFC clusters that partially overlapped across CBMAs, but Social Engagement was associated with a larger cluster with a higher peak z-value in aMPFC, whereas Interaction was associated with a larger cluster with a higher peak z-value in dMPFC (which partially overlapped with both Social Engagement MPFC clusters). Moreover, PCC/precuneus emerged only in the Social Engagement and Human vs Computer CBMAs. Numerous lines of evidence indicate that cortical midline structures are involved in monitoring and integrating information about the self and others.152–156 Human and non-human primate studies have demonstrated that dMPFC and PCC/precuneus are involved in other-oriented social cognitive processes, like social evaluations, while anterior and ventral portions of the MPFC have been linked to self-relevant processes, such as self-evaluations, affective experiences, and reward processing.119,152,153,157 Second-person fMRI studies further suggest that MPFC and PCC/precuneus may track subjective experiences during social interaction.80,91,156,158 Co-viewing emotional stimuli with others (versus solo viewing) elicits activity in vMPFC and aMPFC and increases intersubject synchrony in dMPFC,60,80,135 while PCC/precuneus activity increases over the course of interactions with human (versus robot) partners and during high (versus low) frequency human interactions.156,158 Together, these findings suggest that dMPFC may be important for real-time tracking during Interaction, while aMPFC and PCC/precuneus may reflect the subjective feeling of Social Engagement during social interaction.

This interpretation is supported by the fact that two major hubs of the reward and motivation system, the VS and vMPFC,159,160 showed significant convergence in the Social Engagement and Human vs Computer contrasts. Prior work has proposed that the reward system plays a role in the subjective experience of engaging with a perceived human versus computer partner,50 and may underlie the intrinsic motivation to socially engage with others.25,158 For instance, the VS and vMPFC are associated with social interaction enjoyment, the desire to share personal information with others, and self-reported increases in positive emotion when co-viewing stimuli with a friend.17,80,158,161 Thus, the VS and vMPFC likely play an important role in how social environments motivate people.

Initiating and Responding

The Initiating and Responding results only partially support models from joint attention research suggesting that initiating social interaction involves anterior medial and lateral prefrontal regions associated with volitional, goal-directed processes, while responding involves posterior parietal and temporal regions associated with social perception and bottom-up attention.19,20,162 The overlapping clusters revealed by both Initiating and Responding in the right TPJ/pSTS and in the right anterior ventral IFG extending into AI have been reliably implicated in eye-gaze-based joint attention studies.20,49 The dACC/dMPFC cluster uncovered in the Initiating CBMA also aligns with joint attention studies and is consistent with second-person studies that have demonstrated the MPFC’s role in guiding strategic behavior and assessing the accuracy of an implemented behavioral strategy during social interaction.163,164 The Responding CBMA revealed dissociable clusters in left IPL, bilateral dlPFC, and primary visual cortex, suggesting top-down attentional and perceptual processes involved in responding to others’ behavior.108,165 However, Responding did not uncover other posterior midline and lateral regions identified in joint attention work, suggesting that those regions may be driven by the specific demands of eye-gaze-based attentional cues. Although preliminary, these findings provide a broader perspective on the processes involved in Responding to and Initiating interactive social behaviors.

Limitations and Conclusion

Despite aiming for the most exhaustive search of extant socially interactive neuroimaging studies, this set of studies is not representative of all social interactive contexts and forms. For instance, the majority of included studies used computer interactions as a control condition, biasing our findings toward social engagement. Moreover, CBMA approaches are suited to reported mass-univariate contrast results, which precludes the inclusion of other analytical approaches for investigating fMRI data, such as functional connectivity and inter-brain analyses. Thus, our findings focus on the intra-individual neurocognitive processes engaged by a person during social interaction, rather than the dynamic, inter-individual processes that are better measured by hyperscanning and inter-brain analyses.166 This focus is reflected in our discussion of the results, which tacitly implies a modular view of how the brain works. Current theories in neuroscience suggest a more integrative account that does not assign specific functions to individual regions or networks per se, but instead examines the computations emerging from the chorus of brain regions playing together.167 Furthermore, the MACM and resting-state analyses provided “task-free” measures of functional connectivity that may not reflect social interaction-specific patterns of functional brain organization. Thus, additional research using non-invasive brain stimulation, targeted second-person neuroimaging experiments, and advanced computational modeling techniques is needed to validate and refine the conclusions drawn from the current results. Finally, the specific grouping of contrasts used in our sub-analyses may not be accepted by all readers, and post-hoc grouping of contrasts is inherent in the meta-analytic approach. Therefore, we provide access to all the tags and notes on why each contrast was categorized as it was, as well as the code to quickly re-create the input text files for running follow-up analyses.

Despite these limitations, our findings provide important novel insights into the neural bases of social interaction. They identify the common neurocognitive systems underlying diverse forms of social interaction, as well as subsystems that relate to core components of social interactive behavior. Our findings revealed the involvement of several large-scale brain networks that go beyond what has been reported in non-interactive social neuroscience studies. This supports theories proposing that social interactions are a central organizing principle of human brain function.8,16 Future research can extend these findings to investigate conditions characterized by social interaction challenges and to aid in the development of artificial intelligence systems.168

Data and Code Availability

The data and code used to implement all analyses, as well as executable example notebooks, can be found at https://github.com/JunaidMerchant/SocialInteractionMetaAnalysis.
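The repository's own scripts are not reproduced here. The Python below is a minimal sketch of what re-creating a sub-analysis input file from tagged contrasts could look like. It rests on several assumptions. Each contrast is stored with its study label, contrast name, sample size, sub-analysis tags, and MNI foci. The input files follow the Sleuth text format read by GingerALE and NiMARE. The `Contrast` class, the `to_sleuth` function, the study names, and the coordinates are all hypothetical placeholders, not data or code from this meta-analysis.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Contrast:
    """One contrast entry (hypothetical structure, for illustration only)."""
    study: str                     # study label, e.g. "Author et al., Year"
    name: str                      # contrast label
    n_subjects: int                # sample size for this contrast
    tags: set[str] = field(default_factory=set)  # sub-analysis tags
    foci: list[tuple[float, float, float]] = field(default_factory=list)  # MNI x, y, z


def to_sleuth(contrasts: list[Contrast], tag: str, space: str = "MNI") -> str:
    """Build Sleuth-style text containing only the contrasts that carry `tag`."""
    lines = [f"// Reference={space}"]
    for c in contrasts:
        if tag not in c.tags:
            continue  # skip contrasts not assigned to this sub-analysis
        lines.append(f"// {c.study}: {c.name}")
        lines.append(f"// Subjects={c.n_subjects}")
        for x, y, z in c.foci:
            lines.append(f"{x}\t{y}\t{z}")  # one tab-separated focus per line
        lines.append("")  # a blank line separates experiments
    return "\n".join(lines) + "\n"


# Made-up placeholder contrasts (not real studies or coordinates).
contrasts = [
    Contrast("ExampleStudy1", "social > computer", 24, {"Initiating"},
             [(42, -54, 18), (-6, 30, 36)]),
    Contrast("ExampleStudy2", "reply > listen", 18, {"Responding"},
             [(-44, -60, 40)]),
]

initiating_text = to_sleuth(contrasts, "Initiating")
print(initiating_text)
```

To regenerate the input for an alternative grouping, a reader could pass a different tag (for example, `"Responding"`) or edit a contrast's tags before calling `to_sleuth`. This is the kind of follow-up analysis the Limitations section anticipates. Keeping tags on each contrast, rather than maintaining separate hand-edited files, means a regrouping changes one field per contrast.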

Author Contributions

JSM and ER conceptualized and supervised the project. NT, JSM, and ER designed the search strategy. NT implemented the systematic literature search. SG oversaw the data management. SG, EE, RH, & JSM conducted article screening, data extraction, and conceptualized sub-analyses. JSM wrote the code and implemented data analysis. JSM and ER drafted the initial manuscript, and all authors revised the manuscript.

Funding Sources

Research reported in this publication was supported in part by the National Institute of Mental Health of the National Institutes of Health under Award Numbers R01MH112517 and R01MH125370 (awarded to ER). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Conflicts of Interest

The authors declare no conflicts of interest.

[Figure: … and interaction (blue) meta-analyses and their overlap (green)]

[Figure: … and responding (yellow) meta-analyses and their overlap (green)]