Introduction

The field of neuroscience is still in a fledgling stage. New theoretical perspectives continue to emerge, due in large part to our unprecedented ability to image the brain at high spatial and temporal resolution. Not only can we now non-invasively track whole-brain activity in awake humans with layer-specific and sub-second MRI sequences,1–5 but we can also causally manipulate and then measure multiple neuronal populations at once in model organisms.6–8 In parallel, the growing influx of researchers from quantitative disciplines – such as maths, engineering and physics – has facilitated the development of innovative modelling and analytical frameworks that have allowed for mechanistic insights into healthy and non-healthy neural states.9–15 Owing to its interdisciplinary nature, neuroscience thus continues to be shaped by diverse contributions across a plethora of fields.

Unfortunately, such contributions (and their resulting insights) remain clustered within neuroscience’s different subfields (e.g., micro- vs macro-scale, cognitive vs computational).16 While within-field specialisation is necessary to navigate the complexity of our brain’s multi-scale, multi-level organisation – facilitating deeper perspectives at each scale – a coherent framework is equally important for effectively integrating across these fragmented perspectives. What is standing in the way of the development of this richer ontological clarity?

A cursory read of the literature across subfields shows that our conceptual fragmentation is driven in large part by inherent differences in the terminology used, such that communication between different subfields is typically limited.16 This is perhaps unsurprising, as the questions asked in each subfield are intimately related to the types of data that each subfield analyses, and the methods that are typically used to frame the data into testable hypotheses. In other words, our understanding of how the brain works (i.e., our ontology) is inextricably linked to our idiosyncratic methods (i.e., our epistemology).

In this short perspective, we will argue that this epistemological divide across neuroscientific subfields is a conceptual hurdle that, once overcome, will foster the rapid maturation of our collective capacity to understand the brain.

A Concise Examination of Neuroscience’s Epistemological Divide

Throughout history, epistemological divides have been prevalent across all areas of science. Take measurement units (e.g., meter for distance, kilogram for mass) as an example. Within France – where the metric system was born – there was significant disagreement over the definition of each measurement unit.17,18 In the late 18th century, this epistemological divide motivated the French National Assembly to establish new country-wide definitions, ultimately unifying the country’s measurement system. It took, however, more than 100 years for this consensus to become universally adopted.17,18

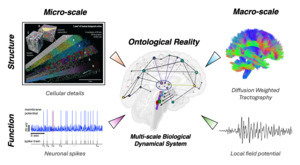

Neuroscience, as a newly emerging field, is currently experiencing this rite of passage, albeit a few centuries later. The neuroscientific epistemological divide arises from different subfields adopting different neuroscientific modalities, which rely on different epistemological processes and thus on inherently different assumptions. As such, across modalities, strikingly different kinds of conclusions regarding the functional organisation of the brain can arise (Figure 1).

Perhaps the most obvious differences come when we consider the scale at which the brain is interrogated. Individual neurons show sparse, all-or-nothing action potentials (or ‘spikes’). When pooled at a relatively coarse scale, these action potentials smooth out, leaving a more continuous readout that is better interpreted as the mean firing rate of each population. While there is still no consensus, the BOLD signal has been more closely linked to pooled local field potentials than to massed spiking activity.19 However, there is also evidence that optogenetically driving spikes in pyramidal neurons in vivo causes a large positive shift in the BOLD signal,20 suggesting a tight correspondence between spiking activity and blood flow.21 Given the differences in scale, it is perhaps unsurprising that opposing ontological conclusions have been reached from experiments conducted at each scale. For instance, the sparsity of neural spiking has led to the hypothesis that the brain is organised to maximise efficiency,22 whereas the smooth nature of whole-brain recordings naturally suggests that the brain is organised so as to maximise redundancy.23 This ontological confusion can be resolved by interrogating neural time series across scales, confirming the intuition that a cross-scale architecture can indeed balance both of these features in a ‘best-case’ scenario for optimal information processing.9
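The pooling argument can be made concrete with a minimal sketch. It assumes a deliberately simple toy model (independent Bernoulli spike trains with a fixed spike probability per time bin) and is not drawn from any of the cited studies; the function names and parameter values are hypothetical. It compares the relative variability of one neuron's spike train with that of the population-averaged signal.

```python
import numpy as np

def simulate_spikes(n_neurons, n_bins, p_spike, seed=0):
    """Toy model (an assumption): independent Bernoulli spike trains.
    Each entry is 1 if the neuron spikes in that time bin, else 0."""
    rng = np.random.default_rng(seed)
    return (rng.random((n_neurons, n_bins)) < p_spike).astype(int)

def population_rate(spikes):
    """Fraction of neurons spiking in each time bin (pooled readout)."""
    return spikes.mean(axis=0)

def coefficient_of_variation(x):
    """Standard deviation relative to the mean: a simple index of 'spikiness'."""
    return x.std() / x.mean()

spikes = simulate_spikes(n_neurons=500, n_bins=1000, p_spike=0.05)
single = spikes[0]                 # sparse, all-or-nothing train of one neuron
pooled = population_rate(spikes)   # smoother, continuous-valued signal

cv_single = coefficient_of_variation(single)
cv_pooled = coefficient_of_variation(pooled)
print(f"CV single neuron: {cv_single:.2f}, CV pooled: {cv_pooled:.2f}")
```

Under these assumptions, the pooled signal fluctuates far less around its mean than any single spike train, which is the sense in which coarse-scale recordings look "smooth". Real populations are correlated, so the reduction would be smaller in practice.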

Another obvious way in which our epistemology shapes ontology is through a focus on either the activation/recruitment of different neurons or the coordinated interactions amongst networks of neurons. Approaches that attempt to identify the most statistically extreme regions associated with a particular task (e.g., Statistical Parametric Mapping24) naturally lead to conclusions that assume that localised neural activity is associated with induced differences in behaviour. In contrast, methods that first identify patterns of covariance between regions (such as those based on Pearson’s correlations25 or Principal Component Analysis26) instead identify pairs of regions (or pairs of pairs of regions) that are similarly considered to be responsible for the dependent variables interrogated in a given experiment.
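To illustrate how the two analytic lenses can single out different regions in the same data, consider a minimal sketch on synthetic signals. Everything here is an assumption made for illustration: three hypothetical regions, a boxcar task design, and a simple two-sample (Welch) contrast standing in for the mass-univariate logic. It is not Statistical Parametric Mapping itself.

```python
import numpy as np

rng = np.random.default_rng(1)
n_time = 400
# Hypothetical boxcar design: alternating 20-sample rest (0) and task (1) blocks.
task = np.tile(np.r_[np.zeros(20), np.ones(20)], 10)

shared = rng.standard_normal(n_time)  # fluctuation common to regions 1 and 2
data = np.empty((3, n_time))
data[0] = 1.0 * task + rng.standard_normal(n_time)    # region 0: task shifts the mean
data[1] = shared + 0.3 * rng.standard_normal(n_time)  # regions 1 and 2: coupled to each
data[2] = shared + 0.3 * rng.standard_normal(n_time)  # other, with no task effect built in

def activation_contrast(data, task):
    """Welch t-statistic per region for task versus rest (activation view)."""
    on, off = data[:, task == 1], data[:, task == 0]
    diff = on.mean(axis=1) - off.mean(axis=1)
    se = np.sqrt(on.var(axis=1, ddof=1) / on.shape[1]
                 + off.var(axis=1, ddof=1) / off.shape[1])
    return diff / se

t_stats = activation_contrast(data, task)  # highlights region 0
corr = np.corrcoef(data)                   # Pearson matrix (covariance view)

print("t-statistics:", np.round(t_stats, 2))
print("correlation matrix:\n", np.round(corr, 2))
```

In this toy setting the activation view points to region 0, whereas the covariance view points to the pair of regions 1 and 2. Neither answer is wrong; each reflects the question its method was built to ask.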

Importantly, these diverse windows into brain function (i.e., within-field specialisation) are ideally complementary: no one method/approach is inherently better/worse than any other. However, we wish to draw attention to the inescapable fact that the conclusions that arise from these various perspectives are often irreconcilable, despite the fact that they are all studying one and the same system. Much like the parallel we drew above with the metric system, we are all interested in quantifying the brain’s functional activity, but we are using different types of recording modalities and failing to translate across them. So, what can be done?

Bridging the epistemological divide is no small feat. In our opinion, the points of tension that arise from the characterisation of the brain from different empirical viewpoints will continue to arise as technology keeps improving and will keep motivating future research. It is through an iterative, dynamic process of trying to harmonise across all these different perspectives that, with time, neuroscience will also reach a universal consensus across ontologies and epistemological processes. In the next section, we provide some early ideas about how we might begin this process towards integrative neuroscience.

How to Bridge Neuroscience’s Epistemological Divide

From our perspective, integrative neuroscience will progressively emerge from adopting an interdisciplinary modus operandi, from setting objective benchmarks between subfield-specific epistemological processes, and, in the interim, from leveraging readily available tools able to map out the diverse neuroscientific space.

Interdisciplinary Training and Exposure

Key to solving the neuroscientific epistemological divide is the creation of effective collaborative links between specialists in different sub-domains. However, this push towards integration across subfields – if taken to its extreme – raises another, equally unsavoury possibility: if we integrate, but lose specialisation, then we run the risk of diminishing the unique utility of work in each area to the point that the insights gleaned lack construct validity. That is, our measures may no longer match the object they are designed to describe and can be equally produced by other sub-fields – promoting redundancy and thus stifling progress and innovation. So, how can we build integration and segregation at the same time?

There is almost certainly no better antidote to our conceptual confusion than the promotion of inter-disciplinary training as part of the neuroscience curriculum.27 However, this challenge is far easier to accomplish in theory than in practice. Firstly, it takes a substantial amount of time to become proficient in a specific sub-domain of neuroscience, and time is a limited resource for students and group leaders alike. Secondly, a student who specialises in an area will naturally raise scientific questions that are out of the comfort zone of the senior members of their group, who may instead all be proficient in another subfield. While potentially exciting and novel, this can also mean that the unwitting student may fail to identify potential pitfalls that an experienced scientist specialised in that area might otherwise spot easily, leading to suboptimal outcomes. Finally, there may simply not be ample local access to the areas of speciality in which a given student may wish to advance their skillset, due to academic traditions present in our universities.

While admitting that each of these factors is a serious concern, we do see a lot of promise in starting to implement interdisciplinary training online, thanks to the profoundly international nature of the neuroscientific community and our strong reliance on the internet.28 In our case, perhaps due to our location so far away from much of the rest of the world’s drama and politics, Australian and Kiwi neuroscientists are forced to identify effective ways of collaborating with experts in domains that are often fundamentally non-local. As such, in our group, we have found virtual communication (via email or social media) to be a surprisingly effective way to identify potential collaborators across different subfields. This exercise of interacting with scientists who utilise diverse modalities and rely on different ontologies is our attempt at bridging neuroscience’s epistemological divide: it requires well-defined questions that can be clearly translated into each specialist’s area of expertise. We also promote and engage with interdisciplinarity via online journal clubs, where we regularly invite trainees in adjacent fields, whose papers catch our attention, to present their work to our group. Importantly, there is, of course, no substitute for in-person interactions, so attending conferences remains a crucial part of this process too, as are targeted visits to the locations where potential collaborators work. Either way, a commitment to inter-disciplinary engagement is absolutely crucial if we want to mature our burgeoning science.

Along these lines, another potential mechanism for making effective ontological progress would be to explicitly design multi-disciplinary conferences, workshops and journal articles. Here, a common question can be posed: for instance, what is the role of the thalamus in modulating neocortical dynamics? The answers given to this question will undoubtedly vary according to the unique descriptions of domain experts. Some might comment on the anatomical relationships between the structures, others on the impact of optogenetic inhibition of specific cell types, and yet others on the nuanced impact of MRI parameters on subcortical signal-to-noise ratios. Each of these perspectives has the potential to provide crucial information to the ultimate answer; however, it does so in a relatively siloed way within its unique epistemological umwelt, making integration inherently challenging. The necessary step is therefore to ultimately corral these differing perspectives into a single coherent format that captures the essence of the individual answers, while retaining domain expertise. This places the onus on the development of a shared ontology, and will depend critically on whether we, as a field, value and support the training of inter-disciplinary specialists who can facilitate effective inter-disciplinary conversations.

Objective Benchmarks

While encouraging interdisciplinary exposure is a key step towards integration in neuroscience (similar to the need to understand all the different ways measurement units were defined in the 18th century), it is also necessary to come to an objective agreement as to how to benchmark the various epistemologies of brain function against one another.

Our field has become a bastion for sharing data and explicit code, but this has not yielded ontological clarity. One solution could therefore be to leverage open data (such as the Human Connectome Project29 or UK Biobank30) and computational analysis toolboxes (like the Brain Dynamics Toolbox31 or The Virtual Brain32) to generate a centralised dictionary/repository of measures used in the literature across ontological perspectives – much like the descriptive idea behind Neurosynth for cognitive neuroscience.33 In doing so, any new method could be submitted to this centralised repository, calculated on the benchmark data, and the results then compared to a host of other measures. Such a process is a major strength of computational modelling approaches, which require explicit construction and are extensible to any analytic approach that could be similarly applied to actual neuroimaging data.34,35 This is not intended as a bioinformatics ploy to remove the need for explicit hypothesis creation and testing,12 which we maintain are irreplaceable mainstays of modern neuroscience, but rather to provide a semi-objective comparison of each of the apparently different methodologies with one another.
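The submit, compute and compare loop described above can be sketched in a few lines. This is a toy under stated assumptions, not an existing repository: the registry, the three example measures and the synthetic AR(1) "benchmark" are all hypothetical, and none of it uses the API of the Brain Dynamics Toolbox or The Virtual Brain. Measures are compared by their rank (Spearman) correlation across the benchmark time series.

```python
import numpy as np

MEASURES = {}  # hypothetical registry: measure name -> function(time_series) -> float

def register(name):
    """Decorator that 'submits' a measure to the shared registry."""
    def wrap(fn):
        MEASURES[name] = fn
        return fn
    return wrap

@register("variance")
def variance(x):
    return float(np.var(x))

@register("lag1_autocorr")
def lag1_autocorr(x):
    x = x - x.mean()
    return float(np.dot(x[:-1], x[1:]) / np.dot(x, x))

@register("low_freq_power")
def low_freq_power(x):
    """Fraction of spectral power in the lowest 10% of non-zero frequencies."""
    power = np.abs(np.fft.rfft(x - x.mean())) ** 2
    cutoff = max(1, len(power) // 10)
    return float(power[1:cutoff + 1].sum() / power[1:].sum())

def make_benchmark(n_series=60, n_time=500, seed=0):
    """Synthetic AR(1) series with varying persistence, standing in for open data."""
    rng = np.random.default_rng(seed)
    phis = rng.uniform(0.0, 0.95, n_series)
    noise = rng.standard_normal((n_series, n_time))
    series = np.zeros((n_series, n_time))
    for t in range(1, n_time):
        series[:, t] = phis * series[:, t - 1] + noise[:, t]
    return series

def rank(values):
    """Ranks 0..n-1 (ties are not handled; values here are continuous)."""
    return np.argsort(np.argsort(values))

def compare_measures(series):
    """Evaluate every registered measure on every series, then rank-correlate."""
    names = list(MEASURES)
    table = np.array([[MEASURES[n](s) for s in series] for n in names])
    ranks = np.array([rank(row) for row in table])
    return names, np.corrcoef(ranks)  # Spearman correlation between measures

names, similarity = compare_measures(make_benchmark())
print(names)
print(np.round(similarity, 2))
```

Here the autocorrelation and spectral measures are constructed very differently yet rank the benchmark series similarly, because both track the persistence of the signal. A real repository would additionally need versioned data, agreed preprocessing and many more measures.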

What kind of ontological clarity might emerge from this process? Objectively identifying patterns of overlap across functional neural measures will demonstrate how these measures, despite their different implementation, are often sensitive to similar features of the data, and will further help disentangle the link between ontology and epistemology. A clear example from the modern neuroimaging literature is that methods that are designed to identify compressed, low-dimensional representations of neuroimaging data often covary with other measures,10,36,37 despite major epistemological dissimilarities in their construction. An important caveat of this process, however, is the potential risk of yielding “false positives”. It could in fact be the case that outcome measures covary for reasons that are not ontologically interesting. For instance, it is possible that certain measures could fall prey to similar aspects of measurement noise, and hence covary in the imaging benchmark dataset. Alternatively, two measures could covary on the modelling dataset for trivial reasons that stem from limitations in our current capacity to effectively model the nervous system in silico. Nonetheless, each of these possibilities points to the fact that it is the process of engaging with this approach that matters more than the specific outcomes themselves.

A Map of Neuroscience

Thus far, our solutions (i.e., interdisciplinary exposure, objective benchmark setting) require the active engagement of the whole neuroscientific community and will therefore only be attainable over a relatively long timescale. So, what can be done in the shorter term?

Much like Neurotree,38 neuroscience would benefit from a map outlining how different subdomains cohere. Such a map would serve as a foray into the many possible ways subfields could collaborate (or not) with one another. It would provide a scaffold and starting point for longer-term solutions, such as the ones proposed in this piece. Additionally, it would help newcomers to the field build an “allocentric” perspective from which to view new papers, ideas and concepts across the neurosciences.

What would this map look like? How could we construct it? Fortunately, attempts at creating cohesive maps have been taking place within specific subfields. In the time-series space, for instance, time-series metrics have been corralled from a broad spectrum of scientific disciplines into a single common framework providing a quantitative mapping between distinct fields.12 However, such approaches have been concept-specific and mostly data-driven. As such, they have been devoid of shared hypotheses and of integrated conclusions, ultimately moving us away from our desired ontological mapping.

A potential solution to creating a more generalisable mapping could be to infer relationships amongst scientific outputs, perhaps via crowd-sourcing. Examples of metrics from which such relationships could be inferred include: the similarity of citations between published papers,16 the content of abstracts from major neuroscience conferences, or the creation of approximate timelines of different prominent fields. Irrespective of the particular method used, the identification of the network as a whole would be undeniably useful for newcomers to the field, who otherwise would struggle to understand the potential ontological utility of the approaches used in different sub-fields.
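As one possible reading of "similarity of citations", the sketch below links papers whose reference lists overlap, using the Jaccard index. The paper names, reference IDs and threshold are hypothetical toy values, and the choice of Jaccard overlap is an assumption made for illustration rather than the method of the cited work.

```python
from itertools import combinations

# Hypothetical toy data: paper -> set of reference IDs it cites.
references = {
    "spiking_paper_A": {"r1", "r2", "r3", "r4"},
    "spiking_paper_B": {"r2", "r3", "r4", "r5"},
    "fmri_paper_C": {"r6", "r7", "r8"},
    "fmri_paper_D": {"r4", "r7", "r8", "r9"},
}

def jaccard(a, b):
    """Shared references divided by all references cited by either paper."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def coupling_edges(refs, threshold=0.2):
    """Edges of the map: paper pairs whose citation overlap meets the threshold."""
    edges = {}
    for p, q in combinations(sorted(refs), 2):
        score = jaccard(refs[p], refs[q])
        if score >= threshold:
            edges[(p, q)] = score
    return edges

edges = coupling_edges(references)
for (p, q), score in edges.items():
    print(f"{p} -- {q}: {score:.2f}")
```

With this toy input, the two spiking papers and the two fMRI papers form separate clusters, while the single shared reference between the clusters falls below the threshold. On a real corpus, such weak cross-cluster links are where candidate bridges between subfields might be found.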

Regardless of the approach taken, a comprehensive map of neuroscience that visualises how different subfields intersect would lay the foundations for resolving the epistemological and ontological divides that challenge our field.

Conclusion

In conclusion, we hope this piece serves as a conversation starter among neuroscientists from different subfields, at different career stages and from different backgrounds, towards implementing concrete solutions to bridge the epistemological (and ontological) divide in the neurosciences. Our goal here was to normalise this divide as an inherent evolutionary step of any scientific discipline and identify ways to help neuroscience mature as a field. Together, by embracing an integrative approach, we will, at best, develop a richer, more nuanced understanding of how the brain works, how it fails in different diseases, and (hopefully) how we might treat it. At the very least, we will have fun figuring out more precisely why we disagree.

Funding Sources

The authors acknowledge funding from the Australian Research Council (10.13039/501100000923) and from the Canadian Institutes of Health Research (CIHR, FRN #MFE-193920).

Conflicts of Interest

The authors declare no conflicts of interest.